Normal Mapping


All our scenes are filled with polygons, each consisting of hundreds or maybe thousands of flat triangles. We boosted the realism by pasting 2D textures on these flat triangles to give them extra details, hiding the fact that the polygons actually consist of tiny flat triangles. Textures help, but when you take a good close look at them it is still quite easy to see the underlying flat surfaces. Most real-life surfaces are not flat, however, and exhibit a lot of (bumpy) details.

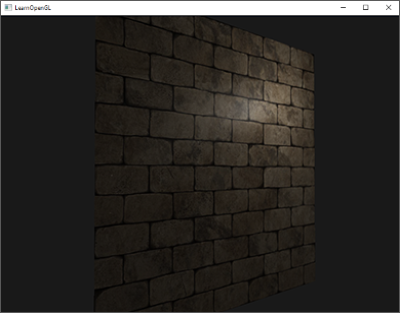

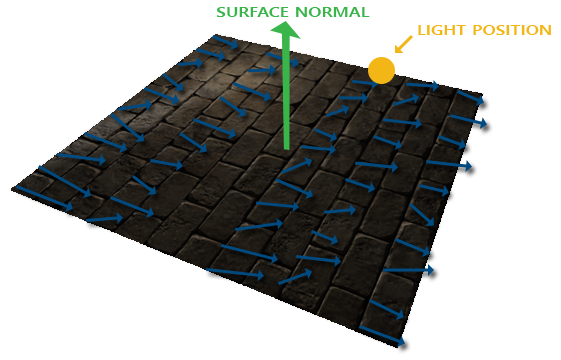

For instance, take a brick surface. A brick surface is quite rough and obviously not completely flat: it contains sunken cement stripes and a lot of detailed little holes and cracks. If we were to view such a brick surface in a lit scene, the immersion would easily be broken. Below we can see a brick texture applied to a flat surface lit by a point light.

The lighting does not take any of the small cracks and holes into account and completely ignores the deep stripes between the bricks; the surface looks perfectly flat.

- We can partly solve the flatness by using a specular map to pretend some surfaces are less lit due to depth or other details, but that's more of a hack than a real solution.

- What we need is some way to inform the lighting system about all the little depth-like details of the surface.

- If we think about this from a light's perspective: how come the surface is lit as a completely flat surface? The answer is the surface's normal vector. From the lighting algorithm's point of view, the only way it determines the shape of an object is by its perpendicular normal vector.

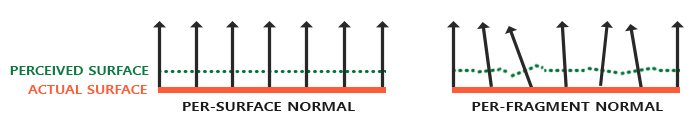

- The brick surface only has a single normal vector, and as a result the surface is uniformly lit based on this normal vector's direction. What if, instead of a per-surface normal that is the same for each fragment, we use a per-fragment normal that is different for each fragment? This way we can slightly deviate the normal vector based on a surface's little details, which gives the illusion that the surface is a lot more complex:

By using per-fragment normals we can trick the lighting into believing a surface consists of tiny little planes (perpendicular to the normal vectors), giving the surface an enormous boost in detail. This technique of using per-fragment normals instead of per-surface normals is called normal mapping or bump mapping. Applied to the brick plane it looks a bit like this:

As you can see it gives an enormous boost in detail and for a relatively low cost. Because we only change the normal vectors per fragment there is no need to change any lighting equation. We now pass a per-fragment normal instead of an interpolated surface normal to the lighting algorithm. The lighting is then what gives a surface its detail.

Normal mapping

To get normal mapping to work we're going to need a per-fragment normal.

- Similar to what we did with diffuse maps and specular maps we can use a 2D texture to store per-fragment data.

- Aside from color and lighting data we can also store normal vectors in a 2D texture. This way we can sample from a 2D texture to get a normal vector for that specific fragment.

- While normal vectors are geometric entities and textures are generally only used for color information, storing normal vectors in a texture might not be immediately obvious.

- If you think about color vectors in a texture, they are represented as a 3D vector with an r, g and b component. We can similarly store a normal vector's x, y and z components in the respective color components. Normal vectors range between -1 and 1, so they're first mapped to [0,1]:

```glsl
vec3 rgb_normal = normal * 0.5 + 0.5; // transforms from [-1,1] to [0,1]
```
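As a minimal sketch of what actually ends up in the texture, assuming a typical 8-bit-per-channel RGB normal map, the C++ helper below applies the same `normal * 0.5 + 0.5` mapping and then quantizes each channel to 0-255. `Vec3`, `RGB8` and `encodeNormal` are hypothetical names for illustration, not part of OpenGL:

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical stand-ins for GLSL's vec3 and an 8-bit RGB texel.
using Vec3 = std::array<float, 3>;
using RGB8 = std::array<std::uint8_t, 3>;

// Maps each component of a unit normal from [-1,1] to [0,1]
// ("normal * 0.5 + 0.5") and quantizes it to an 8-bit channel value.
RGB8 encodeNormal(const Vec3& n) {
    RGB8 out{};
    for (int i = 0; i < 3; ++i) {
        // A unit normal keeps every component within [-1,1].
        assert(std::fabs(n[i]) <= 1.0f && "expects a unit-length normal");
        float c = n[i] * 0.5f + 0.5f;                                 // [-1,1] -> [0,1]
        out[i] = static_cast<std::uint8_t>(std::lround(c * 255.0f)); // [0,1] -> [0,255]
    }
    return out;
}
```

For example, the flat normal (0, 0, 1) is stored as the texel (128, 128, 255), and (0, 0, -1) as (128, 128, 0).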

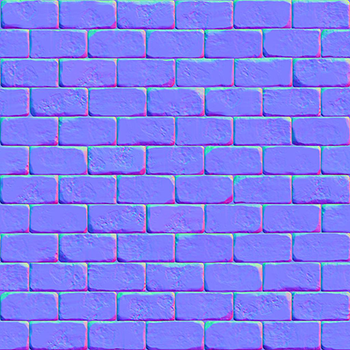

- With normal vectors transformed to an RGB color component like this, we can store a per-fragment normal derived from the shape of a surface onto a 2D texture. An example normal map of the brick surface at the start of this tutorial is shown below:

This (and almost all normal maps you find online) will have a blue-ish tint.

This is because the normals all point closely outward along the positive z-axis, (0, 0, 1), which maps to a blue-ish color. The slight deviations in color represent normal vectors that are slightly offset from the general positive z direction, giving a sense of depth to the texture. For example, you can see that at the top of each brick the color tends to get more green, which makes sense: the top side of a brick would have normals pointing more in the positive y direction, which happens to be the color green!
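Applying the mapping by hand makes the tint concrete. The first normal below is the flat-surface normal; the second, $(0, 0.6, 0.8)$, is only an illustrative unit normal tilted toward $+y$, not a value read from the actual brick map:

$$(0,\ 0,\ 1) \cdot 0.5 + 0.5 = (0.5,\ 0.5,\ 1.0)$$
$$(0,\ 0.6,\ 0.8) \cdot 0.5 + 0.5 = (0.5,\ 0.8,\ 0.9)$$

The first color is a light blue with a full blue channel; tilting the normal toward $+y$ raises the green channel from 0.5 to 0.8, which is the greener tint seen along the tops of the bricks.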

With a simple plane looking at the positive z-axis we can take this diffuse texture and this normal map to render the image from the previous section. Note that the linked normal map is different from the one shown above. The reason for this is that OpenGL reads texture coordinates with the y (or V) coordinates reversed from how textures are generally created. The linked normal map thus has its y (or green) component reversed (you can see the green colors are now pointing downwards); if you fail to take this into account the lighting will be incorrect. Load both textures, bind them to the proper texture units and render a plane with the following changes in a lighting fragment shader:

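```glsl
// assumed vertex-shader output block; only TexCoords is used here
in VS_OUT {
    vec3 FragPos;
    vec2 TexCoords;
} fs_in;

uniform sampler2D normalMap;

void main()
{
    // obtain normal from normal map in range [0,1]
    vec3 normal = texture(normalMap, fs_in.TexCoords).rgb;
    // transform normal vector to range [-1,1]
    normal = normalize(normal * 2.0 - 1.0);

    [...]
    // proceed with lighting as normal
}
```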

Here we reverse the process of mapping normals to RGB colors by remapping the sampled normal color from [0,1] back to [-1,1], and then use the sampled normal vectors for the upcoming lighting calculations. In this case we used a Blinn-Phong shader.
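The decode step can also be reproduced on the CPU, which is handy for checking a normal map by hand. This is a minimal C++ sketch under stated assumptions: the texel is 8-bit RGB, `texelToNormal` and `diffuse` are hypothetical helpers, and the optional `flipGreen` flag is just one alternative to shipping a pre-flipped map (the shader above flips nothing; it relies on the linked map already having its green channel reversed). `diffuse` covers only the diffuse part of Blinn-Phong:

```cpp
#include <algorithm>
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

using Vec3 = std::array<float, 3>;

// Hypothetical helper: turns an 8-bit RGB texel into a unit normal, mirroring
// "normalize(texture(...).rgb * 2.0 - 1.0)". flipGreen reverses the y (green)
// channel for maps authored with the opposite V convention.
Vec3 texelToNormal(std::uint8_t r, std::uint8_t g, std::uint8_t b, bool flipGreen) {
    Vec3 c = {r / 255.0f, g / 255.0f, b / 255.0f};  // what sampling returns: [0,1]
    if (flipGreen) c[1] = 1.0f - c[1];              // reverse the green channel
    Vec3 n = {c[0] * 2.0f - 1.0f, c[1] * 2.0f - 1.0f, c[2] * 2.0f - 1.0f};
    float len = std::sqrt(n[0] * n[0] + n[1] * n[1] + n[2] * n[2]);
    assert(len > 0.0f && "texel does not encode a direction");
    return {n[0] / len, n[1] / len, n[2] / len};
}

// Diffuse factor max(dot(N, L), 0) for unit vectors N and L.
float diffuse(const Vec3& n, const Vec3& l) {
    float d = n[0] * l[0] + n[1] * l[1] + n[2] * l[2];
    return std::max(d, 0.0f);
}
```

A texel of (128, 128, 255) decodes to roughly (0, 0, 1), so a light shining along +z gives a diffuse factor of almost exactly 1.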

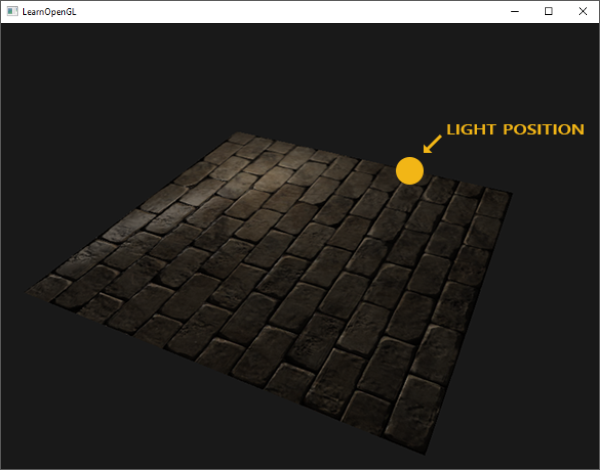

By slowly moving the light source over time you really get a sense of depth using the normal map. Running this normal mapping example gives exactly the results shown at the start of this tutorial:

You can find the full source code of this simple demo here together with its vertex and fragment shader.

There is one issue however that greatly limits this use of normal maps.

- The normal map we used had normal vectors that all roughly pointed in the positive z direction.

- This worked because the plane's surface normal was also pointing in the positive z direction.

- However, what would happen if we used the same normal map on a plane lying on the ground, with a surface normal vector pointing in the positive y direction?

The lighting doesn't look right! This happens because the sampled normals of this plane still point roughly in the positive z direction even though they should point somewhat in the positive y direction of the surface normal.

As a result the lighting thinks the surface's normals are the same as before when the surface was still looking in the positive z direction; the lighting is incorrect. The image below shows what the sampled normals approximately look like on this surface:

You can see that all the normals roughly point in the positive z direction, while they should be pointing along the surface normal in the positive y direction.
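A quick diffuse calculation shows how far off the result is. Assume, purely for illustration, a light directly above the floor, so the light direction is $L = (0, 1, 0)$, and take the diffuse factor as $\max(N \cdot L, 0)$:

$$\text{intended normal } N = (0, 1, 0): \quad \max(N \cdot L,\ 0) = \max(1,\ 0) = 1$$
$$\text{sampled normal } n = (0, 0, 1): \quad \max(n \cdot L,\ 0) = \max(0,\ 0) = 0$$

So a floor lit from straight above would receive no diffuse light at all wherever the normal map stores the flat $(0, 0, 1)$ normal.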

- A possible solution to this problem is to define a normal map for each possible direction of a surface. In the case of a cube we would need 6 normal maps, but with advanced models that can have hundreds of possible surface directions this becomes infeasible.

- A different and also slightly more difficult solution works by doing lighting in a different coordinate space: a coordinate space where the normal map vectors always point roughly in the positive z direction; all other lighting vectors are then transformed relative to this positive z direction.

- This way we can always use the same normal map, regardless of orientation. This coordinate space is called tangent space.

Tangent space

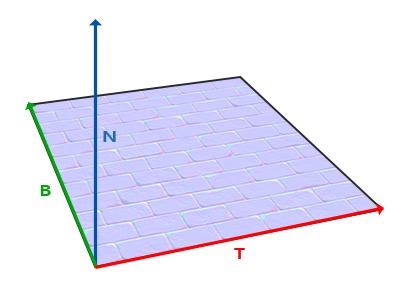

Normal vectors in a normal map are expressed in tangent space where normals always point roughly in the positive z direction.

- Tangent space is a space that's local to the surface of a triangle: the normals are relative to the local reference frame of the individual triangles. Think of it as the local space of the normal map's vectors; they're all defined pointing in the positive z direction regardless of the final transformed direction.

- Using a specific matrix we can then transform normal vectors from this local tangent space to world or view coordinates, orienting them along the final mapped surface's direction.
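In matrix terms, such a transform is a change of basis whose columns are three perpendicular vectors along the surface: the tangent T, bitangent B and normal N that the rest of this post goes on to construct. Here is a minimal C++ sketch, with hypothetical names `Vec3`, `dot3` and `tangentToWorld`, assuming the three vectors are unit length and mutually perpendicular:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec3 = std::array<float, 3>;

float dot3(const Vec3& a, const Vec3& b) {
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
}

// Applies the matrix [T B N] (tangent, bitangent and normal as columns) to a
// tangent-space vector v; the result is v.x * T + v.y * B + v.z * N.
Vec3 tangentToWorld(const Vec3& t, const Vec3& b, const Vec3& n, const Vec3& v) {
    // Sanity check that T and B lie in the surface (perpendicular to N).
    assert(std::fabs(dot3(t, n)) < 1e-4f && std::fabs(dot3(b, n)) < 1e-4f);
    Vec3 out{};
    for (int i = 0; i < 3; ++i)
        out[i] = v[0] * t[i] + v[1] * b[i] + v[2] * n[i];
    return out;
}
```

For the floor plane from the previous section, assuming its texture's u axis runs along world +x and its v axis along world -z, we get T = (1, 0, 0), B = (0, 0, -1) and N = (0, 1, 0), and the tangent-space normal (0, 0, 1) comes out as (0, 1, 0), pointing up as it should. In GLSL the equivalent would be `mat3(T, B, N) * normal`, since a `mat3` built from three `vec3`s takes them as columns.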

Let's say we have the incorrect normal mapped surface from the previous section looking in the positive y direction.

- The normal map is defined in tangent space, so one way to solve the problem is to calculate a matrix to transform normals from tangent space to a different space such that they're aligned with the surface's normal direction: the normal vectors are then all pointing roughly in the positive y direction.

- The great thing about tangent space is that we can calculate such a matrix for any type of surface so that we can properly align the tangent space's z direction to the surface's normal direction.

- Next, let's look at the vectors we need to construct this matrix.

- To construct such a change-of-basis matrix that transforms a tangent-space vector to a different coordinate space we need three perpendicular vectors that are aligned along the surface of a normal map: an up, right and forward vector; similar to what we did in the camera tutorial.

- We already know the up vector, which is the surface's normal vector.

- The right and forward vector are the tangent and bitangent vector respectively. The following image of a surface shows all three vectors on a surface:

- Calculating the tangent and bitangent vectors is not as straightforward as the normal vector. We can see from the image that the direction of the normal map's tangent and bitangent vector align with the direction in which we define a surface's texture coordinates.

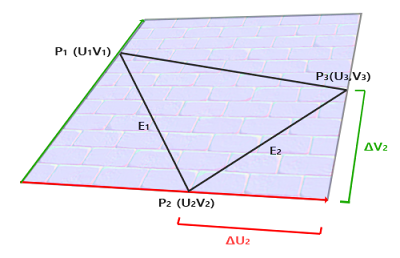

- We'll use this fact to calculate tangent and bitangent vectors for each surface. Retrieving them does require a bit of math; take a look at the following image:

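From the image we can see that the texture coordinate differences of an edge $E_2$ of a triangle, denoted as $\Delta U_2$ and $\Delta V_2$, are expressed in the same direction as the tangent vector $T$ and bitangent vector $B$. Because of this we can write both displayed edges $E_1$ and $E_2$ of the triangle as a linear combination of the tangent vector $T$ and the bitangent vector $B$:

$$E_1 = \Delta U_1 T + \Delta V_1 B$$
$$E_2 = \Delta U_2 T + \Delta V_2 B$$

We can also write this per component as:

$$(E_{1x}, E_{1y}, E_{1z}) = \Delta U_1 (T_x, T_y, T_z) + \Delta V_1 (B_x, B_y, B_z)$$
$$(E_{2x}, E_{2y}, E_{2z}) = \Delta U_2 (T_x, T_y, T_z) + \Delta V_2 (B_x, B_y, B_z)$$

We can calculate $E$ as the difference vector between two triangle vertex positions, and $\Delta U$ and $\Delta V$ as their texture coordinate differences. We're then left with two unknowns (tangent $T$ and bitangent $B$) and two equations. You might remember from your algebra classes that this allows us to solve for $T$ and $B$.

The last equations allow us to write it in a different form, that of matrix multiplication:

$$\begin{bmatrix} E_{1x} & E_{1y} & E_{1z} \\ E_{2x} & E_{2y} & E_{2z} \end{bmatrix} = \begin{bmatrix} \Delta U_1 & \Delta V_1 \\ \Delta U_2 & \Delta V_2 \end{bmatrix} \begin{bmatrix} T_x & T_y & T_z \\ B_x & B_y & B_z \end{bmatrix}$$

Try to visualize the matrix multiplications in your head and confirm that this is indeed the same equation. An advantage of rewriting the equations in matrix form is that solving for $T$ and $B$ becomes much more obvious. If we multiply both sides of the equation by the inverse of the $\Delta U \Delta V$ matrix we get:

$$\begin{bmatrix} \Delta U_1 & \Delta V_1 \\ \Delta U_2 & \Delta V_2 \end{bmatrix}^{-1} \begin{bmatrix} E_{1x} & E_{1y} & E_{1z} \\ E_{2x} & E_{2y} & E_{2z} \end{bmatrix} = \begin{bmatrix} T_x & T_y & T_z \\ B_x & B_y & B_z \end{bmatrix}$$

This allows us to solve for $T$ and $B$. It does require us to calculate the inverse of the delta texture coordinate matrix. I won't go into the mathematical details of calculating a matrix's inverse, but for a 2×2 matrix it comes down to 1 over the determinant of the matrix multiplied by its adjugate matrix:

$$\begin{bmatrix} T_x & T_y & T_z \\ B_x & B_y & B_z \end{bmatrix} = \frac{1}{\Delta U_1 \Delta V_2 - \Delta U_2 \Delta V_1} \begin{bmatrix} \Delta V_2 & -\Delta V_1 \\ -\Delta U_2 & \Delta U_1 \end{bmatrix} \begin{bmatrix} E_{1x} & E_{1y} & E_{1z} \\ E_{2x} & E_{2y} & E_{2z} \end{bmatrix}$$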

This final equation gives us a formula for calculating the tangent vector and bitangent vector from a triangle's two edges and its texture coordinates.
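The final formula translates almost line by line into code, with $E_1 = P_2 - P_1$ and $E_2 = P_3 - P_1$ for a triangle's positions $P_1, P_2, P_3$. Below is a minimal C++ sketch for one triangle at a time; `Vec2`, `Vec3`, `TangentBasis` and `computeTangentBasis` are hypothetical names, and the vectors are returned unnormalized:

```cpp
#include <array>
#include <cassert>
#include <cmath>

using Vec2 = std::array<float, 2>;
using Vec3 = std::array<float, 3>;

struct TangentBasis { Vec3 tangent; Vec3 bitangent; };

// Solves for T and B of triangle (p1, p2, p3) with texture coords (uv1, uv2, uv3):
//   T = f * ( dV2 * E1 - dV1 * E2),  B = f * (-dU2 * E1 + dU1 * E2),  f = 1 / det
TangentBasis computeTangentBasis(const Vec3& p1, const Vec3& p2, const Vec3& p3,
                                 const Vec2& uv1, const Vec2& uv2, const Vec2& uv3) {
    Vec3 e1, e2;  // triangle edges E1 and E2
    for (int i = 0; i < 3; ++i) { e1[i] = p2[i] - p1[i]; e2[i] = p3[i] - p1[i]; }
    float dU1 = uv2[0] - uv1[0], dV1 = uv2[1] - uv1[1];
    float dU2 = uv3[0] - uv1[0], dV2 = uv3[1] - uv1[1];
    float det = dU1 * dV2 - dU2 * dV1;  // determinant of the delta-UV matrix
    assert(std::fabs(det) > 1e-8f && "degenerate texture coordinates");
    float f = 1.0f / det;
    TangentBasis tb{};
    for (int i = 0; i < 3; ++i) {
        tb.tangent[i]   = f * (dV2 * e1[i] - dV1 * e2[i]);
        tb.bitangent[i] = f * (-dU2 * e1[i] + dU1 * e2[i]);
    }
    return tb;
}
```

For a triangle with vertices (-1, 1, 0), (-1, -1, 0), (1, -1, 0) and texture coordinates (0, 1), (0, 0), (1, 0), this gives T = (2, 0, 0) and B = (0, 2, 0). The length 2 reflects that one unit of texture space covers two world units here, so the vectors should be normalized before building the TBN matrix.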

Don't worry if you don't really understand the mathematics behind this. As long as you understand that we can calculate tangents and bitangents from a triangle's vertices and its texture coordinates (since texture coordinates are in the same space as tangent vectors) you're halfway there.
